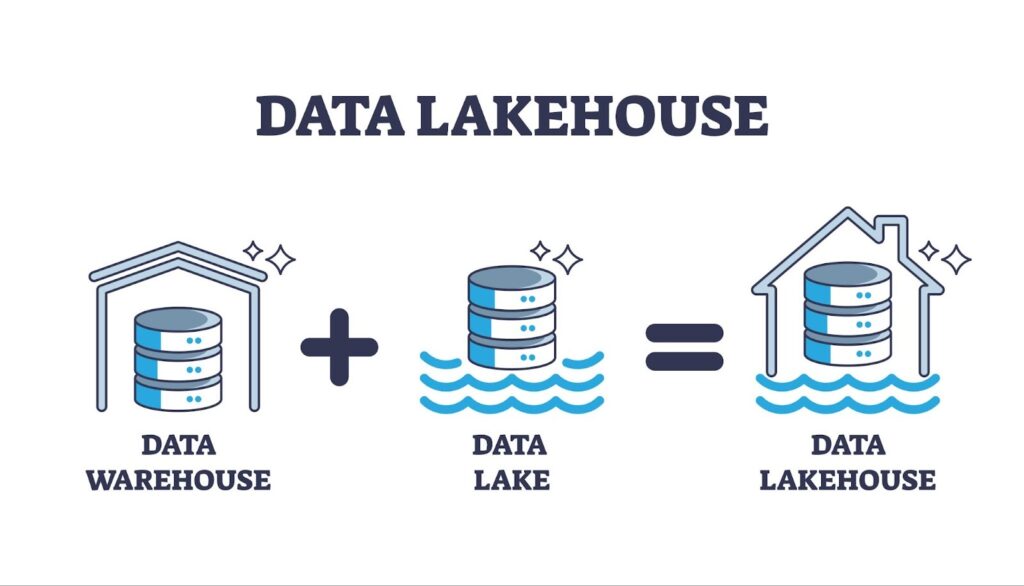

Organizations today generate huge volumes of data from applications, sensors, transactions, and customer interactions. Traditionally, this data has been split between data warehouses for structured analytics and data lakes for raw, often unstructured information, and each comes with limitations. Warehouses are expensive and rigid, while lakes can become messy and unreliable. The data lakehouse was created to solve this problem by combining the low-cost storage and flexibility of a data lake with the performance, governance, and reliability of a warehouse.

A lakehouse is ideal when your data is diverse and growing quickly. If you work with a mix of structured tables, logs, IoT streams, images, or unstructured content, a data warehouse alone can’t easily handle that variety. Lakehouses allow all data types to live in one place while still supporting fast SQL queries and strong governance. They’re especially useful for organizations that need both traditional BI reporting and machine learning in the same environment. Analysts, data engineers, and data scientists can all work from the same system without creating duplicate pipelines or siloed datasets.

Cost efficiency is another major reason to choose a lakehouse. Since lakehouses rely on affordable cloud object storage, they offer a more scalable and budget-friendly approach than storing everything in a warehouse. They also separate compute from storage, letting you use multiple engines and scale resources as needed, which is ideal for teams looking to reduce long-term cloud costs.

Lakehouses also shine when reliability and real-time data are important. They support ACID transactions, schema enforcement, versioning, and streaming ingestion. Together, these capabilities make ETL pipelines far more dependable than they are on a traditional data lake. Manufacturers, logistics companies, retailers, and financial institutions often choose lakehouses to combine streaming data with batch analytics for real-time insights.
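To make the reliability features mentioned above more concrete, here is a minimal sketch in plain Python. It is not how any particular lakehouse engine works internally. `ToyTable` and its methods are hypothetical names invented for this illustration, and the sketch models only three ideas: schema enforcement (bad rows are rejected), all-or-nothing writes (a failed batch changes nothing), and versioning (older snapshots stay readable). It does not model concurrency, isolation, durability, or streaming.

```python
import copy


class ToyTable:
    """In-memory stand-in for a lakehouse table (hypothetical, illustration only)."""

    def __init__(self, schema):
        self.schema = schema      # column name -> expected Python type
        self.versions = [[]]      # version 0 is the empty table

    def _validate(self, row):
        # Schema enforcement: column names and value types must match exactly.
        if set(row) != set(self.schema):
            raise ValueError(f"columns {sorted(row)} do not match schema {sorted(self.schema)}")
        for column, expected in self.schema.items():
            if not isinstance(row[column], expected):
                raise TypeError(f"{column!r} expects {expected.__name__}")

    def append(self, rows):
        # All-or-nothing write: validate the whole batch before committing any of it.
        for row in rows:
            self._validate(row)
        snapshot = self.versions[-1] + [dict(row) for row in rows]
        self.versions.append(snapshot)   # commit as a new version
        return len(self.versions) - 1    # version number of the new snapshot

    def read(self, version=None):
        # Versioning: read the latest snapshot, or any earlier one by number.
        index = -1 if version is None else version
        return copy.deepcopy(self.versions[index])


table = ToyTable({"sensor_id": str, "temperature": float})
first_version = table.append([{"sensor_id": "a1", "temperature": 21.5}])

try:
    # The second row has the wrong type, so the whole batch is rejected.
    table.append([
        {"sensor_id": "a2", "temperature": 19.0},
        {"sensor_id": "a3", "temperature": "hot"},
    ])
    batch_rejected = False
except TypeError:
    batch_rejected = True
```

After the rejected batch, the table still holds only the first row, and the empty version 0 remains readable. On a plain data lake without these checks, the valid half of that batch could have landed while the invalid half failed, leaving downstream reports with partial data.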

Open formats are another advantage. Because lakehouses store data in open file formats like Parquet or ORC, organizations reduce the risk of vendor lock-in and keep their data portable across engines and tools. This flexibility makes lakehouses a strong long-term architectural choice.

However, a lakehouse may not be necessary if your organization is small and works only with structured data. In those cases, a cloud data warehouse alone may be sufficient.

In the end, you should use a data lakehouse when you need a unified, scalable, cost-efficient environment that supports diverse data, reliable pipelines, and both analytics and machine learning. For growing, data-driven organizations, it offers a powerful, future-ready foundation.

How FocustApps Can Help

If you’re curious about how to best manage data for your organization, contact our team of experts at FocustApps. Our team excels at implementing data analytics and AI solutions. For more information, check out our case studies!